Wilfredo Santa Gómez MD

1. How can PEECTS principles enhance neural network models?

The PEECTS framework (Precision, Elasticity, Efficiency, Causality, Transparency, and Stability) is a design lens for strengthening neural networks, especially in scientific and engineering domains. Each principle adds a guardrail for building smarter, more trustworthy AI systems. Here is how each one contributes:

- Precision

Emphasizes minimizing uncertainty in predictions, particularly in high-stakes arenas like climate forecasting or molecular dynamics.

Opinion: Embedding physical laws into the loss function, as in Physics-Informed Neural Networks (PINNs), can substantially improve predictive precision, because the physics residual constrains the model even where training data are sparse.

- Elasticity

Encourages architectures that flexibly adapt to varied input scales—like dynamic networks or adaptive time-stepping ODE solvers.

Opinion: Models that stretch dynamically across sequence lengths (instead of fixed steps) better capture complex system behavior.

- Efficiency

Focuses on optimizing computational resources—through sparsity, quantization, or neural architecture search.

Opinion: Efficiency isn’t just about runtime; it’s about solving the right problem at the right scale, especially in vast simulations.

- Causality

Calls for embedding causal reasoning to promote generalization and interpretability.

Opinion: For physical time series (e.g., weather models), attention-based architectures that respect causal directionality, letting each prediction attend only to earlier states, can be transformative.

- Transparency

Ensures model interpretability—through saliency maps, symbolic regression hybrids, or feature attribution.

Opinion: In science-focused AI, transparency should be a core principle, not an afterthought.

- Stability

Ensures robustness and consistency across perturbations—critical for reliable scientific predictions.
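As a minimal sketch of the Precision opinion, the function below scores a candidate solution with a data-misfit term plus a physics-residual term, which is the core idea of a PINN loss. It assumes a toy decay law du/dt = -k·u with k = 0.5, a weighting parameter `lam`, and finite differences (`np.gradient`) for the time derivative; a real PINN would differentiate a neural network with automatic differentiation instead. All names here are illustrative, not part of PEECTS.

```python
import numpy as np

def physics_informed_loss(u_pred, t, obs_idx, u_obs, k=0.5, lam=1.0):
    """Data misfit plus lam times the residual of the assumed law du/dt = -k*u.

    u_pred  : candidate solution sampled on the time grid t
    obs_idx : indices of the grid points where observations u_obs exist
    """
    # Data term: mean squared error at the sparse observation points only.
    data_loss = np.mean((u_pred[obs_idx] - u_obs) ** 2)
    # Physics term: residual of du/dt + k*u on the whole grid, with du/dt
    # approximated by finite differences (a PINN would use autodiff instead).
    dudt = np.gradient(u_pred, t)
    physics_loss = np.mean((dudt + k * u_pred) ** 2)
    return data_loss + lam * physics_loss

t = np.linspace(0.0, 4.0, 201)
obs_idx = np.array([0, 100, 200])          # only three observations
u_obs = np.exp(-0.5 * t[obs_idx])

exact = np.exp(-0.5 * t)                    # fits the data and the physics
linear = np.interp(t, t[obs_idx], u_obs)    # fits the data, violates the physics

print(physics_informed_loss(exact, t, obs_idx, u_obs))
print(physics_informed_loss(linear, t, obs_idx, u_obs))
```

Both candidates pass exactly through the three observations, so only the physics term separates them: the exponential scores near zero, while the piecewise-linear interpolant is penalized for violating the decay law between observations.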

Altogether, PEECTS offers a well-balanced design framework: precise, adaptable, efficient, causally sound, interpretable, and stable, all indispensable for real-world scientific AI.

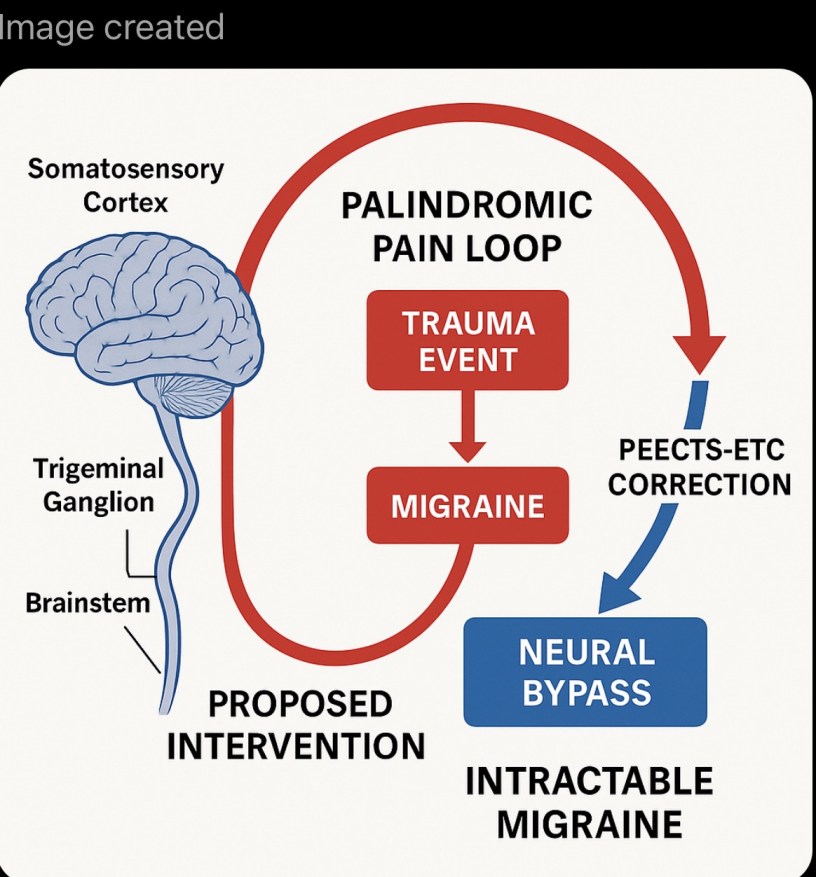

2. Can ETE (Elastic Time Enhancements) act as a “Resolution Enhancement Filter”?

The answer is yes. ETE accomplishes this without changing any model or disregarding its validated properties; it simply improves the model’s predictions in scientific simulations. It requires no extra data: it makes visible what is already present in the model’s numerical data and filters but has not yet been detected. As has already been demonstrated, it improved all of the models’ prediction parameters. PEECTS ETE (Elastic Time Enhancements) has proven valid across all fields and branches of science.

Downloads at: https://github.com/WSantaKronosPEECTS

In short: yes, and there’s promising evidence. Let me clarify what “elastic time corrections” can mean and how they can strengthen AI modeling in dynamic simulations.

a) Elastic Time—Adaptive and Flexible Time Handling

The concept of elasticity in time refers to a model’s ability to adapt its temporal resolution dynamically—for instance, stretching or compressing time steps on the fly to match evolving dynamics in simulations. This flexibility helps neural nets better align with the varying rhythms of complex systems, like when sudden events or subtle slow drifts need different time handling.
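The stretching and compressing of time steps described above can be sketched with a generic step-doubling controller: compare one full Heun step with two half steps, accept the step if they agree within a tolerance, and grow or shrink `dt` accordingly. This is a standard adaptive integrator shown for illustration, not the author’s ETE method; the pulse-forced test equation, the tolerance, and the step limits are assumptions.

```python
import numpy as np

def heun_step(f, t, y, dt):
    """One explicit Heun (improved Euler) step; second-order accurate."""
    k1 = f(t, y)
    k2 = f(t + dt, y + dt * k1)
    return y + 0.5 * dt * (k1 + k2)

def integrate_adaptive(f, t0, y0, t_end, dt0=0.1, tol=1e-6,
                       dt_min=1e-6, dt_max=0.25):
    """Integrate y' = f(t, y), estimating the local error by step doubling."""
    t, y, dt = t0, np.asarray(y0, dtype=float), dt0
    ts, ys, dts = [t], [y.copy()], []
    while t_end - t > 1e-12:
        dt = min(dt, t_end - t)  # never step past t_end
        full = heun_step(f, t, y, dt)
        half = heun_step(f, t, y, dt / 2)
        two_halves = heun_step(f, t + dt / 2, half, dt / 2)
        err = float(np.max(np.abs(two_halves - full)))
        if err <= tol or dt <= dt_min:
            # Accept the step, keeping the more accurate two-half-step result.
            t, y = t + dt, two_halves
            ts.append(t)
            ys.append(y.copy())
            dts.append(dt)
        # Local error of a second-order method scales like dt**3, so rescale
        # by (tol / err)**(1/3) with a safety factor and bounded growth/shrink.
        factor = 0.9 * (tol / max(err, 1e-15)) ** (1.0 / 3.0)
        dt = float(np.clip(dt * min(2.0, max(0.2, factor)), dt_min, dt_max))
    return np.array(ts), np.array(ys), np.array(dts)

def pulse_forced(t, y):
    """Assumed test problem: slow decay driven by a sharp pulse near t = 5."""
    return -y + 10.0 * np.exp(-((t - 5.0) / 0.2) ** 2)

ts, ys, dts = integrate_adaptive(pulse_forced, 0.0, [0.0], 10.0)
print(len(dts), dts.max(), dts.min(), ys[-1, 0])
```

Far from the pulse the controller stretches `dt` to its 0.25 ceiling; around t = 5 it compresses the step to a small fraction of that, which is the kind of different time handling for sudden events that the paragraph above describes.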

b) Random Elastic Space–Time (REST) Prediction

One novel methodology—Random Elastic Space–Time (REST) prediction—has been proposed for tackling temporally varying phase misalignments in dynamic systems. REST adds randomness and temporal alignment mechanisms to help models correct timing discrepancies as simulations evolve.
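REST itself is not specified in detail here, so the sketch below uses a different, well-known elastic alignment technique, dynamic time warping (DTW), only to illustrate what correcting a timing discrepancy between a simulated and a reference trajectory can look like. DTW is deterministic and is a stand-in, not the REST method; the sine signals, the phase lag of 0.4, and the absolute-difference cost are assumptions. The dynamic program runs in O(n·m) time.

```python
import numpy as np

def dtw(a, b):
    """Dynamic time warping between 1-D sequences a and b.

    Returns the minimal cumulative cost of aligning a with b (local cost
    |a[i] - b[j]|) and the warping path as a list of (i, j) index pairs.
    """
    n, m = len(a), len(b)
    cost = np.full((n + 1, m + 1), np.inf)
    cost[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            # Advance both series, or hold one still while the other advances
            # (holding one still is what "stretches" time locally).
            cost[i, j] = d + min(cost[i - 1, j - 1], cost[i - 1, j], cost[i, j - 1])
    # Backtrack from the end to recover the optimal warping path.
    i, j, path = n, m, []
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        move = int(np.argmin([cost[i - 1, j - 1], cost[i - 1, j], cost[i, j - 1]]))
        if move == 0:
            i, j = i - 1, j - 1
        elif move == 1:
            i -= 1
        else:
            j -= 1
    return float(cost[n, m]), path[::-1]

t = np.linspace(0.0, 2.0 * np.pi, 60)
reference = np.sin(t)
simulated = np.sin(t - 0.4)          # same dynamics, lagging in phase
aligned_cost, path = dtw(simulated, reference)
pointwise_cost = float(np.sum(np.abs(simulated - reference)))
print(aligned_cost, pointwise_cost)
```

Because the lagged signal has the same shape as the reference, the warping path absorbs most of the phase offset and the aligned cost comes out well below the pointwise cost; the path itself shows how far each simulated sample has to be shifted in time.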

c) Empirical Error Corrections in Dynamical Models

Another strategy blends machine learning with traditional physical models by having the AI learn and correct the simulation error step by step—in essence, a smart correction layer applied at each time integration. This has proven effective even in chaotic systems such as the Lorenz ’96 model, improving both short‑term predictive skill and long‑term simulation stability.
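A minimal sketch of this step-by-step correction, under stated assumptions: an 8-variable Lorenz ’96 system with forcing F = 8 plays the “truth”, the imperfect physical model uses F = 7, and a linear least-squares map from the state to the one-step error stands in for the learned (e.g., neural-network) corrector the paragraph describes. The dimension, forcing values, step size, and trajectory lengths are illustrative choices, not values from any particular study.

```python
import numpy as np

def l96_tendency(x, forcing):
    """Lorenz '96: dx_i/dt = (x_{i+1} - x_{i-2}) * x_{i-1} - x_i + F, cyclic in i."""
    return (np.roll(x, -1) - np.roll(x, 2)) * np.roll(x, 1) - x + forcing

def rk4_step(x, dt, forcing):
    """Advance the state by one classical fourth-order Runge-Kutta step."""
    k1 = l96_tendency(x, forcing)
    k2 = l96_tendency(x + 0.5 * dt * k1, forcing)
    k3 = l96_tendency(x + 0.5 * dt * k2, forcing)
    k4 = l96_tendency(x + dt * k3, forcing)
    return x + dt / 6.0 * (k1 + 2.0 * k2 + 2.0 * k3 + k4)

# Assumed setup: 8 variables; the "truth" uses F = 8, the imperfect model F = 7.
K, DT, F_TRUE, F_MODEL = 8, 0.01, 8.0, 7.0
rng = np.random.default_rng(0)

def truth_run(n_steps, x0):
    """Integrate the true system and return the visited states."""
    xs = [np.asarray(x0, dtype=float)]
    for _ in range(n_steps):
        xs.append(rk4_step(xs[-1], DT, F_TRUE))
    return np.array(xs)

def model_step(x):
    """One step of the imperfect physical model (wrong forcing)."""
    return rk4_step(x, DT, F_MODEL)

# Spin up onto the attractor, then record training and held-out trajectories.
spin = truth_run(1000, F_TRUE + rng.normal(scale=0.1, size=K))
train = truth_run(3000, spin[-1])
test = truth_run(1000, train[-1])

# Learn the one-step model error as a linear function of [x, 1] (least squares).
inputs = train[:-1]
targets = train[1:] - np.array([model_step(x) for x in inputs])
design = np.hstack([inputs, np.ones((len(inputs), 1))])
W, *_ = np.linalg.lstsq(design, targets, rcond=None)

def corrected_step(x):
    """Imperfect-model step followed by the learned correction."""
    return model_step(x) + np.append(x, 1.0) @ W

# One-step errors on held-out states.
raw = np.array([model_step(x) for x in test[:-1]]) - test[1:]
cor = np.array([corrected_step(x) for x in test[:-1]]) - test[1:]
rmse_raw = float(np.sqrt(np.mean(raw ** 2)))
rmse_cor = float(np.sqrt(np.mean(cor ** 2)))

def forecast(step, x, n_steps):
    """Apply a one-step map repeatedly."""
    for _ in range(n_steps):
        x = step(x)
    return x

# 100-step (one time unit) forecast error from the first held-out state.
err_raw = float(np.linalg.norm(forecast(model_step, test[0], 100) - test[100]))
err_cor = float(np.linalg.norm(forecast(corrected_step, test[0], 100) - test[100]))
print(f"one-step RMSE  model: {rmse_raw:.2e}  corrected: {rmse_cor:.2e}")
print(f"100-step error model: {err_raw:.2e}  corrected: {err_cor:.2e}")
```

Because the model error in this toy setup is a constant forcing bias, a linear corrector captures it almost entirely; real model errors are usually state-dependent and nonlinear, which is where a neural-network corrector becomes worthwhile.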

My Opinion on Elastic Time Corrections

This concept truly resonates with me. In many physical systems, one-size-fits-all time discretization fails—simply put, not all moments in a simulation behave the same. Imagine a neural ODE that auto-adjusts its time steps based on system activity, or a hybrid model that learns to correct the simulation’s timing—these are powerful paths toward greater fidelity and efficiency.