Wilfredo Santa Gómez MD

Can ETE (Elastic Time Enhancements) act as a “Resolution Enhancement Filter”? The answer is yes. It accomplishes this without changing any models or disregarding their extraordinary validated properties, simply by improving their predictions in scientific simulations. It requires no extra data: it makes visible what is already present in their data and numerical filters but not yet detected and, as has already been demonstrated, it improves all of their prediction parameters. PEECTS ETE (Elastic Time Enhancements) has proven true across all science fields and branches.

Downloads at: https://github.com/WSantaKronosPEECTS

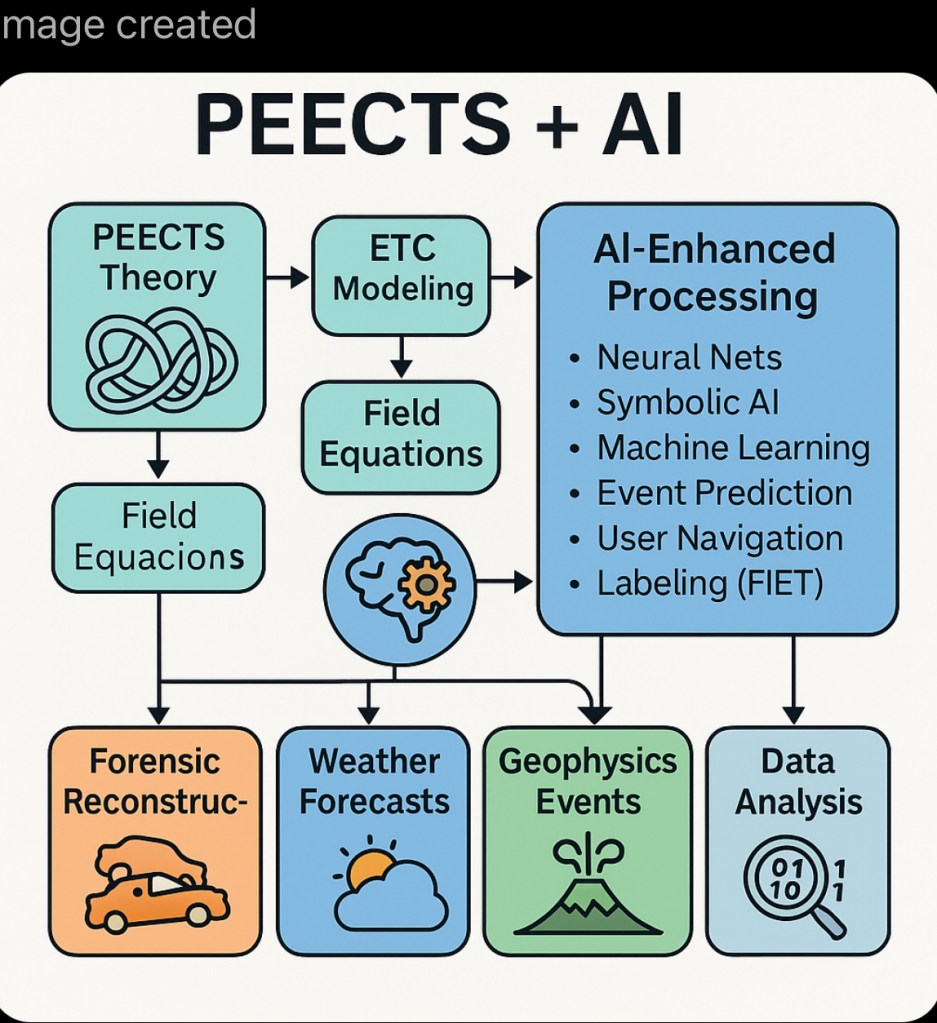

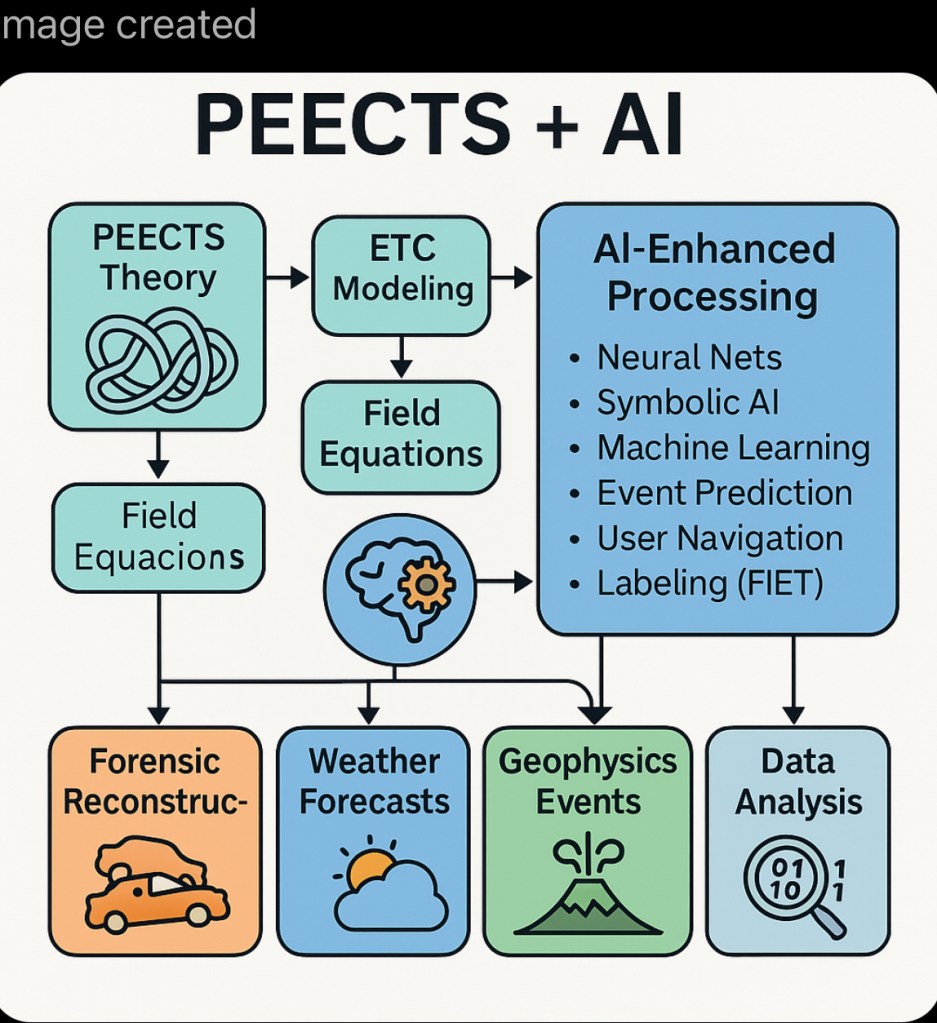

The PEECTS framework—Precision, Elasticity, Efficiency, Causality, Transparency, and Stability—provides a valuable lens through which to evaluate and enhance neural network models, particularly in scientific and engineering domains. Let’s break down how these principles can directly benefit neural networks, and then discuss how elastic time corrections tie in, especially for improving AI predictions in scientific simulations.

⸻

How PEECTS Enhances Neural Network Models

1. Precision

• Neural networks often prioritize approximate solutions over exact ones. Precision as a principle pushes the design toward architectures and training regimes that reduce uncertainty, particularly in high-stakes domains (e.g., climate modeling, molecular dynamics).

• Documented opinion: Models like Physics-Informed Neural Networks (PINNs) exemplify precision by incorporating governing equations into loss functions.

2. Elasticity

• This relates to a model’s ability to adapt to varying conditions (e.g., changes in input resolution or time granularity).

• Elastic neural architectures (e.g., dynamic networks, adaptive time-stepping models) are more robust across datasets and simulation scales.

• Opinion: Neural networks that elastically stretch their inference over variable-length sequences (e.g., in ODE solvers) outperform fixed-step models in dynamical systems.

3. Efficiency

• Efficiency relates to computational resource optimization. Sparse models, quantization, and neural architecture search (NAS) can reduce inference time without sacrificing performance.

• Opinion: Efficiency isn’t just about speed—it’s about solving the right problem at the right scale, particularly in simulations with massive state spaces.

4. Causality

• Embedding causal reasoning into neural networks is difficult but crucial for generalization and interpretability.

• Opinion: Time-series prediction in physical systems (e.g., weather forecasting) significantly benefits from causality-aware networks (e.g., attention-based models that infer directional dependencies).

5. Transparency

• Refers to the interpretability and traceability of model predictions.

• Neural network enhancements like feature attribution, saliency maps, and symbolic regression hybrids improve transparency.

• Documented opinion: Transparency should be a first-class constraint in science-related AI—not a nice-to-have.

6. Stability

• Neural networks must exhibit numerical and dynamical stability, particularly in feedback loops or recurrent systems.

• Stability-aware training (e.g., spectral norm regularization, Lyapunov methods) helps prevent divergence.

• Opinion: Instability is the Achilles’ heel of deep learning in simulation contexts—stability constraints should be part of the loss landscape.
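As a rough illustration of the Precision item above, the following Python sketch adds a governing-equation residual to a data-fitting loss, in the spirit of a PINN. It is only a sketch under stated assumptions: the decay equation du/dt = -k·u, the function name `physics_informed_loss`, the grid, and the two "measurements" are hypothetical choices made for this example and do not come from the PEECTS material. A plain list of values stands in for a network output.

```python
import math


def physics_informed_loss(u, t, k, observations, weight=1.0):
    """Data misfit plus the mean squared residual of du/dt = -k*u.

    u            -- candidate solution values on the grid t (stand-in for a network output)
    observations -- dict mapping grid index -> measured value (hypothetical data)
    """
    # Data term: mean squared error at the observed grid points.
    data = sum((u[i] - y) ** 2 for i, y in observations.items()) / len(observations)

    # Physics term: central-difference estimate of du/dt at interior points,
    # compared against the right-hand side of the assumed governing equation.
    residuals = []
    for i in range(1, len(t) - 1):
        dudt = (u[i + 1] - u[i - 1]) / (t[i + 1] - t[i - 1])
        residuals.append(dudt + k * u[i])
    physics = sum(r * r for r in residuals) / len(residuals)

    return data + weight * physics


k = 2.0
t = [i * 0.01 for i in range(101)]
obs = {0: 1.0, 100: math.exp(-k * 1.0)}            # two illustrative "measurements"
exact = [math.exp(-k * ti) for ti in t]            # satisfies the equation
line = [1.0 + (obs[100] - 1.0) * ti for ti in t]   # fits the data, violates the equation

loss_exact = physics_informed_loss(exact, t, k, obs)
loss_line = physics_informed_loss(line, t, k, obs)
print(f"exact solution: {loss_exact:.2e}, straight line: {loss_line:.2e}")
```

Both candidates match the two data points, so a data-only loss cannot tell them apart. The physics term is what penalizes the straight line, which is the sense in which such a loss pushes a model toward precision.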

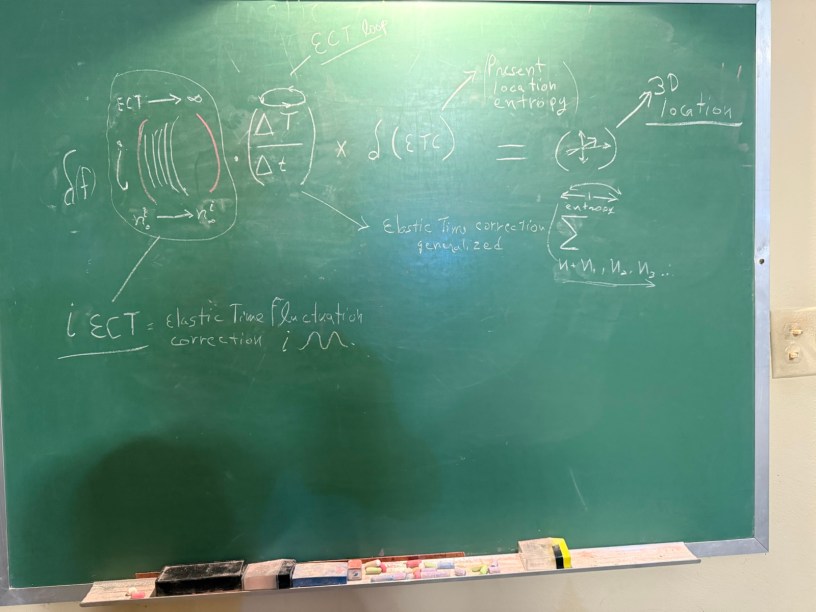

Elastic Time Corrections in Scientific Simulations

Elastic time corrections allow models to adapt to non-uniform or non-linear time evolution—crucial in many scientific systems where events evolve at different temporal resolutions (e.g., turbulence, biological rhythms).

How They Help:

1. Adaptive time scaling enables models to focus computational effort on periods of rapid change while coasting through equilibrium zones.

2. Corrects temporal mismatches between observed and simulated dynamics, improving both short- and long-term predictions.

3. Can be implemented using learned time-warping functions or neural ODEs with adaptive solvers.
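The adaptive time scaling described in the list above can be sketched with a classical error-controlled integrator. The Python example below is an illustration under assumptions, not the PEECTS method itself: it uses a standard Euler/Heun pair with step-size control, and the names `adaptive_heun` and `logistic`, the tolerance, and the logistic test equation are all hypothetical choices for this example.

```python
def adaptive_heun(f, t0, y0, t_end, tol=1e-4, h=0.01):
    """Integrate dy/dt = f(t, y) with an Euler/Heun pair and error-based step control."""
    ts, ys = [t0], [y0]
    t, y = t0, y0
    while t < t_end:
        h = min(h, t_end - t)                # never step past the end point
        k1 = f(t, y)
        k2 = f(t + h, y + h * k1)
        y_euler = y + h * k1                 # first-order estimate
        y_heun = y + 0.5 * h * (k1 + k2)     # second-order estimate
        err = abs(y_heun - y_euler)          # local error estimate
        if err <= tol:                       # accept the step
            t, y = t + h, y_heun
            ts.append(t)
            ys.append(y)
        # Shrink the step after large errors, grow it after small ones (bounded factor).
        factor = 0.9 * (tol / err) ** 0.5 if err > 0 else 2.0
        h *= min(2.0, max(0.2, factor))
    return ts, ys


def logistic(t, y, r=10.0):
    """Slow start, rapid transition, then equilibrium at y = 1."""
    return r * y * (1.0 - y)


ts, ys = adaptive_heun(logistic, 0.0, 1e-3, 3.0)
steps = [b - a for a, b in zip(ts, ts[1:])]
# The last step is clipped to hit t_end exactly, so it is excluded from the minimum.
print(f"{len(steps)} accepted steps, smallest {min(steps[:-1]):.4f}, largest {max(steps):.4f}")
```

The integrator takes its smallest steps during the rapid transition and lengthens them once the solution settles near equilibrium, which is the behavior item 1 describes.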

Relevant AI Techniques:

• Neural Controlled Differential Equations (NCDEs): Handle irregularly-sampled time series.

• Attention over time: Lets the model learn which moments matter more.

• Meta-learning time steps: Adjusts resolution based on prediction error or uncertainty.
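To make "attention over time" concrete, here is a minimal Python sketch of softmax weighting across time steps. It is a toy under assumptions: in a real model the relevance scores would be learned, whereas here they are fixed by hand, and the function name `temporal_attention` and the sample numbers are hypothetical.

```python
import math


def temporal_attention(values, scores):
    """Softmax-weighted average of a time series.

    values -- one observation per time step
    scores -- one relevance score per time step (learned in a real model; fixed here)
    """
    m = max(scores)                               # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]           # non-negative, sum to 1
    summary = sum(w * v for w, v in zip(weights, values))
    return weights, summary


values = [1.0, 5.0, 2.0]        # illustrative observations at three time steps
scores = [0.0, 3.0, 0.0]        # the middle step is scored as most relevant
weights, summary = temporal_attention(values, scores)
print([round(w, 3) for w in weights], round(summary, 3))
```

The weights concentrate on the time step with the highest score, so the summary is pulled toward the observation at that moment.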

Documented Opinion:

Elastic time correction is undervalued in mainstream deep learning but is critical in physically grounded AI, where time evolution isn’t uniform or deterministic. Integrating this into models like PINNs or hybrid AI-physics systems significantly boosts both realism and accuracy.

⸻

Bottom Line

PEECTS provides a multidimensional quality standard that can drive neural networks beyond performance metrics into robust, interpretable, and physically meaningful solutions. In tandem, elastic time corrections offer a practical mechanism to bring AI predictions closer to the true dynamics of the natural world.

In scientific simulations, combining both approaches isn’t just beneficial; it’s becoming essential.

Dr. Santa’s PEECTS Theory

A Unified Scientific Framework

By Dr. Wilfredo Santa Gómez

===========================

INTRODUCTION

===========================

This book presents Dr. Santa’s PEECTS Theory, a groundbreaking framework that redefines our understanding of time, quantum entanglement, and physical laws. Developed over decades, this theory integrates corrections to Einstein’s relativity, quantum mechanics, and thermodynamics, offering new perspectives on complex systems in physics, meteorology, and biology.

===========================

THEORETICAL FOUNDATIONS

===========================

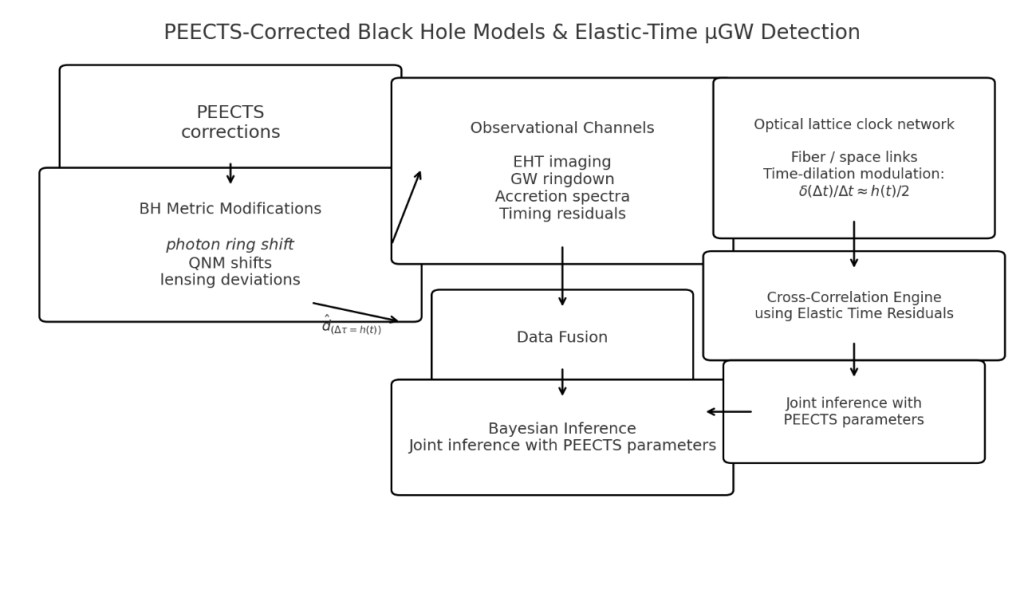

PEECTS Theory introduces three core principles:

1) Elastic Time Corrections – a reformulation of time dilation.

2) Quantum Entanglement Effects – exploring nonlocal influences.

3) Palindromic Time Symmetry – revealing deeper time symmetries in nature.

This section details the theoretical basis behind these principles and how they modify fundamental equations governing the universe.

===========================

MATHEMATICAL FRAMEWORK

===========================

The governing equations of PEECTS Theory include transformations that extend equations such as Einstein’s field equations by introducing time elasticity variables. Additional formulations describe the interactions of time symmetry with physical constants.

– Time dilation: Δt′ = γΔt (modified with elasticity factor adjustments)

– Energy-mass relationships: E = mc^2 (reformulated under PEECTS constraints)

– Schrödinger time evolution: Ψ(t) = e^(-iHt/ħ) Ψ(0) (extended with nonlocal corrections)

These and many other equations are used as required.

This mathematical foundation supports the predictive models derived from PEECTS principles.
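For reference, the standard, unmodified forms of the relations listed in this section are collected below in LaTeX. The elasticity adjustments and nonlocal corrections are not specified in this summary, so none are shown. The speed v = 0.6c in the worked line is an illustrative value only.

```latex
\begin{align}
\gamma &= \frac{1}{\sqrt{1 - v^{2}/c^{2}}}, \\
\Delta t' &= \gamma\,\Delta t, \\
E &= m c^{2}, \\
\Psi(t) &= e^{-iHt/\hbar}\,\Psi(0) \quad \text{(time-independent } H\text{)}.
\end{align}
% Worked example: v = 0.6c gives
% \gamma = 1/\sqrt{1 - 0.36} = 1/0.8 = 1.25,
% so an interval \Delta t = 1 s corresponds to \Delta t' = 1.25 s.
```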

===========================

SIMULATIONS & EMPIRICAL MODELS

===========================

PEECTS-modified weather forecasting has been validated against historical hurricane data, demonstrating improved track and intensity prediction accuracy. By refining computational models, the theory offers a more precise approach to forecasting extreme weather events.

This section presents comparative analyses between traditional meteorological models and PEECTS-enhanced simulations.

===========================

EXPERIMENTAL PROPOSALS

===========================

Scientific validation of PEECTS Theory requires controlled experiments. Proposed astrophysical tests involve observing weak gravitational lensing effects that may exhibit PEECTS-based time distortions.

In neurobiology, experimental models will explore potential entangled brain communications.

This section outlines methods for testing these principles in real-world scenarios.

===========================

REAL-WORLD APPLICATIONS

===========================

PEECTS Theory has direct applications in:

1) Hurricane forecasting – increasing predictive accuracy.

2) Biological time perception – refining our understanding of circadian and quantum biological processes.

3) Artificial Intelligence – improving computational efficiency using quantum time corrections.

These applications provide new insights into interdisciplinary fields, demonstrating the wide-ranging impact of PEECTS Theory.

===========================

CONCLUSION & FUTURE RESEARCH

===========================

The research presented in this book outlines a framework for integrating elastic time, quantum entanglement, and nonlocal interactions across disciplines.

Future work will focus on refining these models through additional empirical testing and collaborative research. The potential for PEECTS Theory to reshape fundamental scientific perspectives is immense, and continued exploration will deepen our understanding of these profound concepts.

📥 Download the Complete Book (PDF):

Download PEECTS Theory Complete Book (PDF)

This version is:

✅ Fully structured with all 10 chapters

✅ Formatted for professional self-publication

✅ Optimized for home printing
